Our Technology Solutions

How We Can Help Your Business

Implementation Services

- End-to-end ERP implementation

- Business process analysis & mapping

- Data migration & integration

- Custom module development

- Industry-specific ERP solutions

Support Services

- 24/7 technical assistance

- Bug fixing & troubleshooting

- Performance optimization

- User training & adoption support

- Regular system health checks

Upgrade Services

- Migration from legacy ERP systems to Dynamics 365

- Upgrade to latest Business Central versions

- Add-on & extension updates

- Testing & validation post-upgrade

- Smooth transition with minimal downtime

Cloud Services

- Azure Infrastructure setup & migration

- Backup, disaster recovery & security

- Power BI & advanced analytics on Azure

- AI, ML & automation on Azure

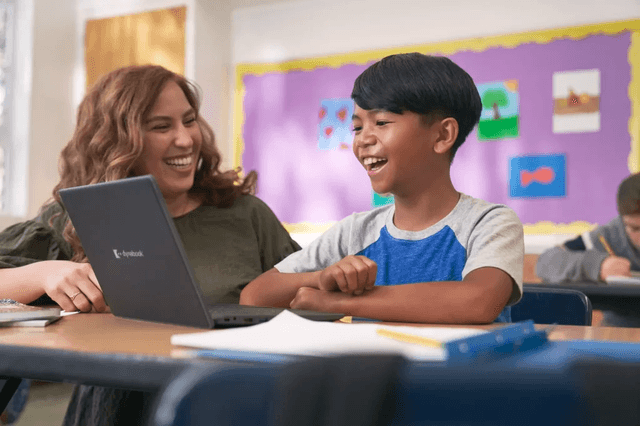

Vatsin Mission & Goal

At Vatsin, our mission is to empower businesses through innovative software solutions that streamline operations, foster growth, and drive success, with a commitment to excellence and customer satisfaction.

Our Approach

Data-driven diagnostic and predictive app for improving outcomes.

Technologies We Use

PHP

JavaScript

TypeScript

React JS

NextJS

React Native

Flutter

Azure

Azure DevOps

Azure Storage

Azure Backup

Azure AD

Azure AD B2C

Cosmos DB

Swift

Power Platform

Power Automate

Power Apps

Power Fx

Power Pages

Power BI

Dataverse

Copilot

Business Central

Finance

Finance & Operations

Supply Chain

Sales

Marketing

Core HR

Customer Service

Commerce

500+ customers digitally transformed by Vatsin

Need Support?

We specialize in Microsoft Dynamics 365 (BC, F&O, CE), Power Platform (Power Apps, Power BI, Power Automate), Azure, AI integration, custom application development, DevOps implementation, and expert talent recruitment in Microsoft technologies.

Yes, we provide complete ERP implementation from discovery and solution architecture to deployment, customization, integration, training, and ongoing support.

Absolutely! We have deep expertise in legacy system upgrades and seamless migration to Dynamics 365 Business Central or Finance & Operations.

We serve a wide range of industries including Manufacturing, Retail, Finance, Distribution, Real Estate, and Professional Services.

Yes, we develop tailor-made web and mobile applications integrated with Microsoft platforms and APIs based on your business needs.

It's an all-in-one ERP solution that helps small to mid-sized businesses manage finance, operations, inventory, sales, and customer service on a unified platform.

Yes, we provide custom integration services with third-party tools, CRMs, payment gateways, logistics software, and more using APIs and middleware.

Yes, we integrate AI tools such as predictive analytics, intelligent automation, chatbot solutions, and ML algorithms into your existing systems to enhance decision-making.

We offer flexible post-go-live support including managed services, dedicated support engineers, and SLA-based maintenance contracts.

Yes. We offer pre-vetted, certified professionals in Dynamics 365, Azure, Power Platform, and full-stack development, available onsite, offshore, or hybrid.

Yes, we specialize in fast turnaround for contract and full-time Microsoft technology hiring based on your budget and urgency.

Yes, we have access to seasoned professionals including D365 Solution Architects, Functional Leads, and Project Managers with industry-specific expertise.

We can kick off discovery and initial assessment within 3–5 business days after scoping and agreement.

Absolutely. We encourage clients to begin with a PoC to validate approach, integration, and outcome.

We work with clients across the globe, including India, North America, Europe, the Middle East, and Asia-Pacific, with teams available to work in multiple time zones.

Let's Start

Initiating Your Journey to Success and Growth.

- 01 Share your requirements

- 02 Discuss them with our experts

- 03 Get a free quote

- 04 Start the project